i4Q Edge Workloads Placement and Deployment

General Description

i4QEW is a toolkit for deploying and running AI workloads in edge computing environments, which are prevalent in manufacturing facilities, using a Cloud/Edge architecture. i4QEW provides interfaces and capabilities for running different workloads efficiently on a variety of industrial devices at the edge, including placement and deployment services. The design and implementation of this solution take the challenges of scalability and heterogeneity into account from the start. This task requires tight coordination with the Scalable Policy-based Model Distribution from Cloud to Edge (implemented in the i4QAI: AI Models Distribution to the Edge solution), so that each AI workload and its model meet and collaborate on the correct edge target nodes. This solution resides at the back-end infrastructure level, providing capabilities to be used by other solutions and pilots. It supports the placement and deployment of many kinds of AI workloads on edge nodes running several varieties of orchestrators, depending on the underlying heterogeneous devices.

Features

Make placement decisions and deploy AI workloads from the cloud (or a data centre) to the edge, where they are expected to use locally available models. The distribution of AI workloads is coordinated with the model distribution and deployment mechanism to ensure that the right workloads get to interact with the right AI model.

Help deploy AI workloads at the edge at scale. To enable this, a policy-based deployment pattern can be used by associating policies and labels with the (potentially different) target nodes.

Manage the lifecycle of AI workloads, from creation in the cloud, through initial deployment at the edge, to re-deployment when necessary.

Support a policy-based placement mechanism that eases the administrator's task by allowing the rules for eligible targets to be specified in a simplified manner.

Support a GitOps-based mode of operation. Git is the connection point between the AI application developer and the application administrator and deployer: new resources pushed into Git are retrieved and deployed on the target nodes associated with the requested labels.

Support the deployment of workloads with Red Hat Advanced Cluster Management for Kubernetes (RHACM). ACM serves as the basis for enabling deployment operations at scale.

Support AI workloads packaged in a container. The orchestration engine is in charge of running the workload, and takes care of corresponding lifecycle operations, such as rolling updates.

Operate seamlessly on small to very large multi-site infrastructures, as is common in smart manufacturing environments.

Support lightweight orchestration engines such as K3s, so that the solution can be deployed and made operational on a variety of host devices and architectures, from high- to low-footprint artefacts.
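Policy-based placement of this kind is usually expressed with Kubernetes-style label selectors; RHACM's PlacementRule, for instance, selects managed clusters through a `clusterSelector` made of `matchLabels` and `matchExpressions`. The minimal Python sketch below models that matching logic to show how one short rule picks the eligible targets out of a heterogeneous fleet. It is a toy model under that assumption, not RHACM's placement controller, and the cluster names and labels are hypothetical.

```python
# Toy model of label-selector placement (not RHACM's placement controller).
# Cluster names and labels used with it are hypothetical.
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class Expression:
    """One matchExpressions entry: key, operator and (optional) values."""
    key: str
    operator: str  # "In", "NotIn", "Exists" or "DoesNotExist"
    values: List[str] = field(default_factory=list)

    def matches(self, labels: Dict[str, str]) -> bool:
        if self.operator == "In":
            return labels.get(self.key) in self.values
        if self.operator == "NotIn":
            # As in Kubernetes, NotIn also matches when the key is absent.
            return labels.get(self.key) not in self.values
        if self.operator == "Exists":
            return self.key in labels
        if self.operator == "DoesNotExist":
            return self.key not in labels
        raise ValueError(f"unsupported operator: {self.operator}")


def selects(match_labels: Dict[str, str], expressions: Iterable[Expression],
            labels: Dict[str, str]) -> bool:
    """All matchLabels pairs AND all matchExpressions must hold."""
    if any(labels.get(k) != v for k, v in match_labels.items()):
        return False
    return all(e.matches(labels) for e in expressions)


def place(clusters: Dict[str, Dict[str, str]], match_labels: Dict[str, str],
          expressions: Iterable[Expression] = ()) -> List[str]:
    """Return the names of the eligible clusters, sorted for stable output."""
    exprs = list(expressions)  # iterated once per cluster, so materialise it
    return sorted(name for name, labels in clusters.items()
                  if selects(match_labels, exprs, labels))


if __name__ == "__main__":
    fleet = {
        "plant-a-line1": {"env": "lrt", "arch": "arm64"},
        "plant-a-line2": {"env": "lrt", "arch": "amd64"},
        "plant-b-qa": {"env": "qa", "arch": "amd64"},
    }
    print(place(fleet, {"env": "lrt"}))
    # -> ['plant-a-line1', 'plant-a-line2']
    print(place(fleet, {"env": "lrt"}, [Expression("arch", "In", ["arm64"])]))
    # -> ['plant-a-line1']
```

Under this model, making a new edge node eligible is only a matter of labelling it, which is what keeps the administrator's rules short as the fleet grows.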

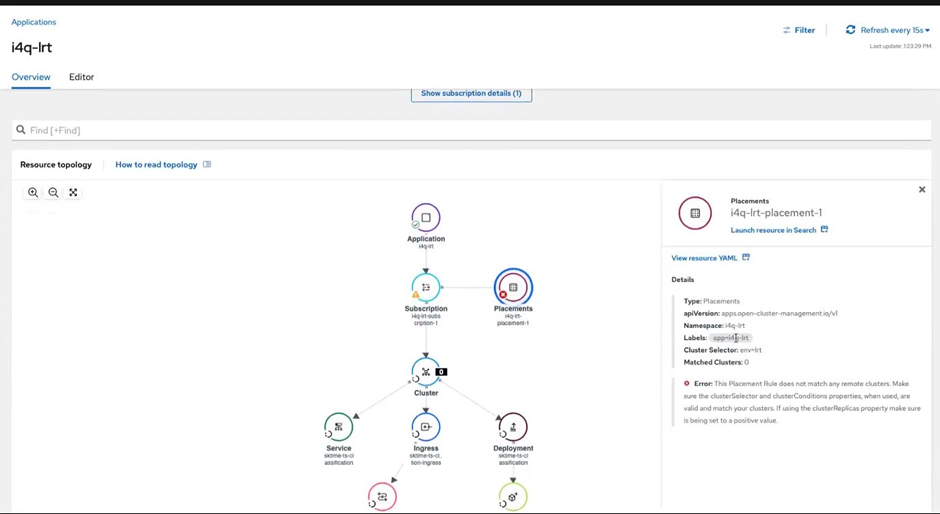

Screenshots

Commercial Information

License

TBD

Pricing

TBD

Associated i4Q Solutions

Required

Can operate without any other i4Q solution.

Optional

i4Q AI Models Distribution to the Edge

Installation Guidelines

System Requirements

Access to a RHACM instance

Access to a VM that can run K3s

Provisioning a K3s cluster

Deploy the cluster with the following command:

curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="server --write-kubeconfig-mode 644" sh
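Before importing the cluster, it can help to confirm that the K3s node has actually come up. The helper below is a sketch, not part of the i4Q tooling: it polls `kubectl get nodes -o json` until every node reports a `Ready` condition with status `True`. It assumes `kubectl` is on the PATH; on a K3s server that command reads `/etc/rancher/k3s/k3s.yaml` by default, which the `--write-kubeconfig-mode 644` flag above makes readable for non-root users. The five-minute timeout is an arbitrary choice.

```python
# Sketch: wait until every K3s node reports Ready. Assumes kubectl is on PATH.
import json
import subprocess
import time
from typing import Any, Dict


def all_nodes_ready(nodes: Dict[str, Any]) -> bool:
    """True if `kubectl get nodes -o json` output lists at least one node and
    every node has a Ready condition whose status is "True"."""
    items = nodes.get("items", [])
    if not items:
        return False
    for node in items:
        conditions = node.get("status", {}).get("conditions", [])
        if not any(c.get("type") == "Ready" and c.get("status") == "True"
                   for c in conditions):
            return False
    return True


def wait_for_k3s(timeout_s: float = 300.0, poll_s: float = 5.0) -> bool:
    """Poll the cluster until all nodes are Ready or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        result = subprocess.run(["kubectl", "get", "nodes", "-o", "json"],
                                capture_output=True, text=True)
        if result.returncode == 0 and all_nodes_ready(json.loads(result.stdout)):
            return True
        time.sleep(poll_s)
    return False
```

Calling `wait_for_k3s()` returns `True` once the node is Ready, or `False` if the timeout expires first.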

Attaching a K3s cluster to RHACM

These steps are taken from the RHACM documentation section "Importing a target managed cluster to the hub cluster". The K3s cluster must be able to reach the RHACM instance in order to be imported.

1. Open the ACM UI.
2. Open Infrastructure -> Clusters (path ACM-URL/multicloud/clusters).
3. Click Import Cluster.
4. Enter a cluster name (preferably one that describes the cluster).
5. Click “Save import and generate code”.
6. Copy the generated command and run it on your K3s cluster.

Within a few minutes, the imported cluster should appear as “Ready” under Infrastructure -> Clusters.
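The import can also be checked from the command line on the hub. This sketch reads the status conditions of the cluster's `ManagedCluster` resource and reports which of `HubAcceptedManagedCluster`, `ManagedClusterJoined` and `ManagedClusterConditionAvailable` are not yet `True`; treating these three conditions as equivalent to the “Ready” state shown in the UI is our assumption. The cluster name is whatever was entered in the import dialog.

```python
# Sketch: check an imported cluster's ManagedCluster conditions on the RHACM hub.
import json
import subprocess
from typing import Any, Dict, List

# Condition types treated here (an assumption) as what the UI shows as "Ready".
REQUIRED = ("HubAcceptedManagedCluster", "ManagedClusterJoined",
            "ManagedClusterConditionAvailable")


def missing_conditions(managed_cluster: Dict[str, Any]) -> List[str]:
    """Return the required condition types whose status is not "True"."""
    conditions = managed_cluster.get("status", {}).get("conditions", [])
    true_types = {c.get("type") for c in conditions if c.get("status") == "True"}
    return [t for t in REQUIRED if t not in true_types]


def check(cluster_name: str) -> List[str]:
    """Fetch the ManagedCluster from the hub (kubectl must point at the hub)."""
    out = subprocess.run(
        ["kubectl", "get", "managedcluster", cluster_name, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return missing_conditions(json.loads(out))
```

For example, `check("my-k3s-cluster")` (a hypothetical name) returns an empty list once the import has completed.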

Deploying an application on the RHACM instance

If your application pulls container images from private registries, a Docker registry access secret (an image pull secret) must be deployed on the managed cluster and referenced under imagePullSecrets, either in the Deployment itself or in the service account that the Deployment uses.
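As a sketch of the two options, the helpers below add an `imagePullSecrets` reference to a Deployment manifest held as a Python dict, and build the patch body commonly passed to `kubectl patch serviceaccount`. The function names are illustrative only; for a Helm chart such as the one deployed in the User Manual, the reference would normally live in the chart's Deployment template instead.

```python
# Sketch: reference an image pull secret at Deployment or service-account level.
import copy
import json
from typing import Any, Dict


def add_pull_secret(deployment: Dict[str, Any], secret: str) -> Dict[str, Any]:
    """Return a copy of a Deployment manifest whose pod template lists `secret`
    under imagePullSecrets (added only once)."""
    result = copy.deepcopy(deployment)
    pod_spec = (result.setdefault("spec", {})
                      .setdefault("template", {})
                      .setdefault("spec", {}))
    refs = pod_spec.setdefault("imagePullSecrets", [])
    if {"name": secret} not in refs:
        refs.append({"name": secret})
    return result


def service_account_patch(secret: str) -> str:
    """Patch body for: kubectl patch serviceaccount <sa> -n <ns> -p '<body>'."""
    return json.dumps({"imagePullSecrets": [{"name": secret}]})
```

Referencing the secret at Deployment level keeps the dependency visible in the manifest, while the service-account route applies it to every pod that uses that account.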

User Manual

This section describes the steps necessary to deploy a model on an ACM instance and to have ACM propagate and deploy it to the connected managed clusters.

First, deploy the Docker authentication secret on your edge K3s cluster.

Configure:

GitLab user:

export GITLAB_USER=...

Docker-config access token for the private container registry:

export DOCKER_READ_TOKEN=...

Secret name (referenced in the deployment, e.g., i4qregistry):

export SECRET_NAME=...

Create the secret (create the i4q-lrt namespace first if it does not exist yet):

kubectl create secret docker-registry "$SECRET_NAME" --docker-server=registry.gitlab.com --docker-username="$GITLAB_USER" --docker-password="$DOCKER_READ_TOKEN" -n i4q-lrt --dry-run=client -o yaml | kubectl apply -f -
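To see what the command above stores, the sketch below rebuilds the same kind of Secret in Python: type `kubernetes.io/dockerconfigjson`, with a `.dockerconfigjson` key holding a base64-encoded Docker config whose `auth` field is the base64 of `username:password`. The helper names are hypothetical. Comparing the decoded `.dockerconfigjson` contents with those in the `--dry-run=client -o yaml` output is a quick way to confirm that the right credentials were picked up; the encoded strings themselves may differ if the JSON is serialised differently.

```python
# Sketch: rebuild the Secret that `kubectl create secret docker-registry` produces.
import base64
import json
from typing import Any, Dict


def docker_config(server: str, username: str, password: str) -> Dict[str, Any]:
    """Docker config JSON; "auth" is base64("username:password")."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    return {"auths": {server: {"username": username,
                               "password": password,
                               "auth": auth}}}


def pull_secret_manifest(name: str, namespace: str, server: str,
                         username: str, password: str) -> Dict[str, Any]:
    """Secret of type kubernetes.io/dockerconfigjson; Secret data is base64."""
    config = json.dumps(docker_config(server, username, password),
                        separators=(",", ":"))  # compact JSON
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": base64.b64encode(config.encode()).decode()},
    }
```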

Then create the application through the RHACM UI:

1. Visit the Applications UI (/applications).
2. Click “Create Application”, then “Subscription”.
3. Enter the following information:
   - Name: <i4q-lrt>
   - Namespace: <i4q-lrt>
   - Repository type: select Git
   - URL: <https://gitlab.com/i4q/LRT>
   - Username: your GitLab user
   - Access token: a GitLab access token of yours with read API permissions
   - Branch: <acm-dev>
   - Path: <charts/sktime>
4. Scroll down to “Deploy application resources only on clusters matching specified labels” and enter:
   - Label name: <env>
   - Label value: <lrt>
5. Click “Save”.
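The same subscription can also be created declaratively, which fits the GitOps mode of operation. The sketch below generates a `Channel`, a `PlacementRule` and a `Subscription` as a single JSON `List`, assuming RHACM's `apps.open-cluster-management.io/v1` application API and its `git-branch`/`git-path` subscription annotations. Resource names and the optional Git credentials secret are hypothetical; the UI may additionally create an `Application` resource that groups these for display, and newer RHACM releases also offer the `Placement` API as an alternative to `PlacementRule`.

```python
# Sketch: declarative RHACM GitOps resources (assumed apps.open-cluster-management.io/v1 API).
# Resource names and the Git credentials secret are hypothetical.
import json
from typing import Any, Dict, Optional

API = "apps.open-cluster-management.io/v1"


def gitops_resources(name: str, namespace: str, repo: str, branch: str,
                     path: str, labels: Dict[str, str],
                     git_secret: Optional[str] = None) -> Dict[str, Any]:
    """Return a Channel, PlacementRule and Subscription wrapped in a v1 List."""
    channel: Dict[str, Any] = {
        "apiVersion": API, "kind": "Channel",
        "metadata": {"name": f"{name}-channel", "namespace": namespace},
        "spec": {"type": "Git", "pathname": repo},
    }
    if git_secret:
        # Assumed to be a Secret holding `user` and `accessToken` keys.
        channel["spec"]["secretRef"] = {"name": git_secret}
    placement = {
        "apiVersion": API, "kind": "PlacementRule",
        "metadata": {"name": f"{name}-placement", "namespace": namespace},
        # Same selector as the label name/value pair entered in the UI.
        "spec": {"clusterSelector": {"matchLabels": dict(labels)}},
    }
    subscription = {
        "apiVersion": API, "kind": "Subscription",
        "metadata": {
            "name": f"{name}-subscription",
            "namespace": namespace,
            "annotations": {
                "apps.open-cluster-management.io/git-branch": branch,
                "apps.open-cluster-management.io/git-path": path,
            },
        },
        "spec": {
            "channel": f"{namespace}/{name}-channel",
            "placement": {"placementRef": {"kind": "PlacementRule",
                                           "name": f"{name}-placement"}},
        },
    }
    return {"apiVersion": "v1", "kind": "List",
            "items": [channel, placement, subscription]}


if __name__ == "__main__":
    print(json.dumps(gitops_resources(
        "i4q-lrt", "i4q-lrt", "https://gitlab.com/i4q/LRT",
        "acm-dev", "charts/sktime", {"env": "lrt"}), indent=2))
```

Saved as, say, `gitops_resources.py`, its output could be applied on the hub with `python3 gitops_resources.py | kubectl apply -f -`.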

Getting the application deployed on a managed cluster

The application above is bound to a placement rule that selects managed clusters with the label env=lrt. To add the label to your managed clusters, visit Infrastructure -> Clusters in the ACM UI, or run the following command against the RHACM hub cluster:

kubectl label managedcluster <cluster-name> env=lrt

Accessing the application

If port 80 is accessible on your K3s cluster’s VM, you should be able to access the application at http://CLUSTER_PUBLIC_IP_OR_DNS/api/ui.
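Because the application may take some time to be pulled and started after the label is applied, a small poller can replace repeated manual refreshes. This sketch assumes the UI is served over plain HTTP, presumably through the Traefik ingress that K3s bundles by default, and treats any 2xx or 3xx response as success; 4xx/5xx responses (such as a 404 before the route exists) and connection errors are retried. The `host` argument stands in for CLUSTER_PUBLIC_IP_OR_DNS, and the timeouts are arbitrary.

```python
# Sketch: poll the application UI until it answers with a 2xx/3xx status.
import time
import urllib.request


def ui_url(host: str, port: int = 80) -> str:
    """Build the UI URL; the port is omitted when it is the HTTP default."""
    netloc = host if port == 80 else f"{host}:{port}"
    return f"http://{netloc}/api/ui"


def wait_for_ui(host: str, port: int = 80, timeout_s: float = 120.0,
                poll_s: float = 5.0) -> bool:
    """Return True once GET <ui_url> succeeds, False after the timeout."""
    url = ui_url(host, port)
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=10) as resp:
                if 200 <= resp.status < 400:
                    return True
        except OSError:
            # URLError/HTTPError (e.g. a 404 before the route exists), refused
            # connections and timeouts are all OSError subclasses: retry.
            pass
        time.sleep(poll_s)
    return False
```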